Exploring the Key Pillars of Software Engineering — Reliability, Scalability, and Maintainability

Have you ever wondered how software systems work flawlessly, even under heavy usage? Or how they can adapt and grow with increasing demands? And what about making changes to the software without breaking everything? Well, these are the three pillars we’ll explore today: reliability, scalability, and maintainability.

Why should we learn this topic:-

Building robust and reliable software: Understanding reliability helps you develop software that works correctly and consistently, ensuring that users can rely on it to perform its intended functions. By learning techniques like rigorous testing and error handling, you can create more dependable software that meets user expectations.

Handling increasing demands: As software applications gain popularity or experience growth, they need to handle increased workloads and user demands. Knowledge of scalability allows you to design and develop software systems that can scale effectively, ensuring that performance doesn’t degrade as usage grows. This skill is crucial for building applications that can handle high traffic and maintain a positive user experience.

Adapting to changing requirements: Software is rarely static, and requirements often change over time. Maintainability enables you to modify, update, and repair software efficiently. By following best practices such as code documentation, modularization, and version control, you can make future changes more manageable and cost-effective, saving time and effort.

Reliability:-

The system should continue to work correctly (performing the correct function at the desired level of performance) even in the face of hardware or software faults, and even human error.

Consider the example of Netflix. We expect that whenever we visit Netflix it will be available, and that we will be able to watch shows & movies from the comfort of our homes without any issues.

Similarly, take the example of our banks. We know that most of the time a UPI payment will simply work. Also, if nothing is debited from or credited to the account, the balance stays the same. It will not vanish into thin air. These are some examples of reliable systems.

In the case of Netflix, there are hundreds of services that help in serving the traffic. To make a system reliable, the engineers should think about which errors can occur in the system & how to handle each of them.

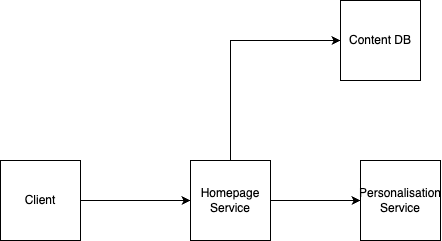

Now let’s see how we can turn an unreliable system into a reliable one. Consider the example below, which is a basic version of the architecture of Netflix:-

There is a client making a request to the homepage server. The homepage server fetches the content from the DB & calls the personalization server to personalize the content for the user. Now what will happen if the personalization server fails? The failure can happen for many reasons, such as a hardware failure in the EC2 instance hosting the server or an outage of the entire AWS region where the service is hosted.

If the system is poorly built & does not handle errors properly, then our homepage will not load, because we will not get any response from the personalization service. Instead, our homepage service should handle the error & send the un-personalized data to the user. In this case the data won’t be personalized, but at least we are showing something to the user & the user will still be able to watch the shows that they like. This is one of the reasons why you should think about reliability from the ground up while building the system.

Similarly, we can reduce the chance of the personalization service going down by hosting it in multiple AWS regions, so that even if one machine/region goes down our service will still be up. Obviously, we cannot guarantee 100% uptime for any service/component, but we can get close to it & in case of a failure of a particular service, we should try to handle that error in the best possible way.
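The fallback described above can be sketched in a few lines of Python. This is only a minimal illustration, not how Netflix actually implements it: `Homepage`, `fetch_catalog`, `build_homepage`, the two personalizer functions, and the `PersonalizationUnavailable` exception are all hypothetical names made up for this example.

```python
from dataclasses import dataclass


class PersonalizationUnavailable(Exception):
    """Raised when the (hypothetical) personalization service cannot be reached."""


@dataclass
class Homepage:
    titles: list
    personalized: bool


def fetch_catalog():
    # Stand-in for the DB read done by the homepage server.
    return ["Show A", "Show B", "Show C"]


def working_personalizer(user_id, titles):
    # Toy "personalization": reverse the order for this user.
    return list(reversed(titles))


def failing_personalizer(user_id, titles):
    # Simulates the personalization server being down.
    raise PersonalizationUnavailable("personalization service is down")


def build_homepage(user_id, personalize):
    titles = fetch_catalog()
    try:
        return Homepage(personalize(user_id, titles), personalized=True)
    except PersonalizationUnavailable:
        # Degrade gracefully: serve the un-personalized catalog instead of failing.
        return Homepage(titles, personalized=False)


print(build_homepage("user-1", working_personalizer))
print(build_homepage("user-1", failing_personalizer))
```

The second call still returns a page, just with `personalized=False`, which is exactly the behaviour we want when a dependency is down.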
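The multi-region idea can be sketched as a simple failover loop on the caller's side. This is an assumption-heavy toy: in practice failover is usually handled by DNS or a load balancer rather than application code, and `call_with_failover`, `RegionDown`, and the handler functions are hypothetical names. The region names are used only as labels.

```python
class RegionDown(Exception):
    """Raised when a (hypothetical) regional endpoint cannot serve the request."""


def call_with_failover(endpoints, request):
    """Try each regional endpoint in order and return the first successful reply."""
    errors = []
    for region, handler in endpoints:
        try:
            return region, handler(request)
        except RegionDown as exc:
            # Remember the failure and move on to the next region.
            errors.append((region, str(exc)))
    raise RuntimeError(f"all regions failed: {errors}")


def down(request):
    # Simulates a region-wide outage.
    raise RegionDown("region outage")


def healthy(request):
    return f"personalized:{request}"


endpoints = [("us-east-1", down), ("eu-west-1", healthy)]
print(call_with_failover(endpoints, "user-1"))
```

If every region fails, the function raises, and the caller can then fall back to un-personalized content as described earlier.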

The poorly handled error discussed above is categorized as a software failure, even though it was triggered by a hardware failure. There are mainly 3 causes of failures:-

Hardware Failure:- Machines going down, disk failures, AWS region outages.

Software Failure:- Bad handling of errors/corner cases, a service slowing down due to increased load, etc.

Human Error:- Not using the software the way it was designed to be used.

As engineers, we should try to reduce the possibility of errors in all of the above cases.

Scalability:-

Even if a system is working reliably today, that doesn’t mean it will necessarily work reliably in the future. One common reason for degradation is increased load: perhaps the system has grown from 10,000 concurrent users to 100,000 concurrent users, or from 1 million to 10 million. Perhaps it is processing much larger volumes of data than it did before. Scalability is the ability of a software system to handle increased workloads and growing demands without sacrificing performance.

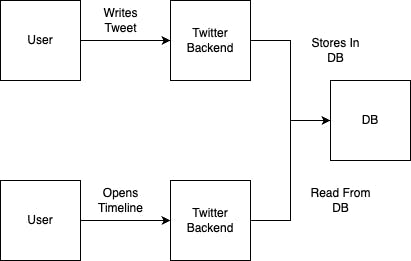

Let us consider the example of Twitter:-

There are 2 main operations on Twitter.

Writing tweets (6k req/sec on average)

View timeline: a user can view tweets posted by the people they follow (300k requests/sec)

There are 2 ways to handle the reading & writing operation:-

Approach 1, writing & reading from central storage:- Whenever someone writes a tweet, we store that tweet in the database. When someone requests the timeline content, we go through all the users that the requesting user follows, fetch their latest tweets & show them to the user.

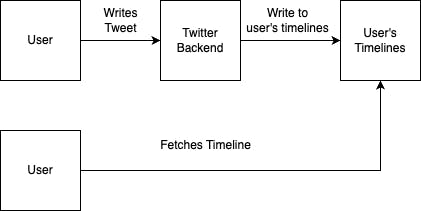

Approach 2, maintaining a cache of each user’s home timeline:- Whenever someone writes a tweet, the system writes that tweet into the timeline of each of that user’s followers.

The benefit of approach 2 is that reads become cheap. Tweets are written far less often than timelines are read, so it is more important to reduce the cost of reading than the cost of writing. In the 2nd approach we no longer query the DB for every followed user when a timeline is requested. Writing is much slower now, but because posting happens far less often than reading, that is an acceptable trade-off. The first version of Twitter used approach 1, but as the number of users grew, they shifted to the 2nd approach.
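To make the difference between the two approaches concrete, here is a small in-memory sketch in Python. It is not Twitter's implementation: the dictionaries stand in for the database and the cache, and all names (`follow`, `post_tweet`, `timeline_on_read`, `timeline_from_cache`) are hypothetical. It also assumes that `seq` increases with every tweet and ignores backfilling the cache when someone follows a new user.

```python
from collections import defaultdict

followers = defaultdict(set)        # author -> users who follow the author
following = defaultdict(set)        # user -> authors the user follows
tweets = defaultdict(list)          # author -> tweets (central storage)
timeline_cache = defaultdict(list)  # user -> precomputed home timeline


def follow(user, author):
    following[user].add(author)
    followers[author].add(user)


def post_tweet(author, text, seq):
    tweet = (seq, author, text)
    tweets[author].append(tweet)        # approach 1: a single write
    for user in followers[author]:      # approach 2: one extra write per follower
        timeline_cache[user].append(tweet)


def timeline_on_read(user):
    # Approach 1: gather tweets from everyone the user follows at read time.
    merged = [t for author in following[user] for t in tweets[author]]
    return sorted(merged, reverse=True)  # newest first


def timeline_from_cache(user):
    # Approach 2: the work was done at write time, so a read is a single lookup.
    return timeline_cache[user][::-1]    # newest first


follow("alice", "bob")
follow("alice", "carol")
post_tweet("bob", "hello", seq=1)
post_tweet("carol", "good morning", seq=2)

print(timeline_on_read("alice"))
print(timeline_from_cache("alice"))
```

Both functions return the same timeline. The difference is where the cost lands: approach 1 pays on every read, approach 2 pays once per follower on every write.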

The above example shows us that to make systems scalable we need to understand how much load the current system can handle. To understand this there are various parameters which you can measure. Below are 2 of the most commonly used parameters:-

Throughput:- If you have a job or a batch processing system, you can measure it in terms of throughput. Suppose you have written a cron job that processes 5 GB of data in 5 seconds; the throughput of that system is then 1 GB/s.
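The throughput arithmetic above is just data volume divided by elapsed time. A tiny Python helper (the function name `throughput_gb_per_s` is made up for this example) makes that explicit:

```python
def throughput_gb_per_s(data_gb, seconds):
    """Throughput = amount of data processed / time taken."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return data_gb / seconds


# The cron job from the text: 5 GB processed in 5 seconds.
print(throughput_gb_per_s(5, 5))  # 1.0
```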

Response time:- A web application’s performance is generally measured by its response time, which is how long an API takes to respond to the user. Response time varies a lot from request to request because of network delays, cache misses, the DB being under heavy load at that moment, etc. So instead of a single number we generally use percentiles. Suppose someone says your API has a p50 (50th percentile) of 2ms. That means 50% of the requests were answered in less than 2ms, and the other 50% took longer. People generally measure p95 (95th percentile), p99 (99th percentile), and p999 (99.9th percentile).

Every system is designed to handle a certain number of requests at a time; no system can handle an infinite number of requests without changing its fundamental architecture. While designing systems you shouldn’t design for a huge number of users who may or may not come in the future. Instead, design a system that will work for the next 3–5 years.
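Here is a small Python sketch of how percentiles can be computed from a list of measured response times. It uses the nearest-rank method, which is only one of several common definitions, and the sample values are invented for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample such that at least p% of samples are <= it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based position in the sorted list
    return ordered[rank - 1]


# Made-up response times in milliseconds; two slow outliers at the end.
response_times_ms = [1, 1, 2, 2, 2, 3, 3, 4, 50, 400]

print("p50:", percentile(response_times_ms, 50))  # 2
print("p95:", percentile(response_times_ms, 95))  # 400
print("mean:", sum(response_times_ms) / len(response_times_ms))
```

Notice how the two slow requests barely affect p50 but dominate p95. This is why high percentiles are useful for spotting the slow requests that an average would hide.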

Maintainability:-

All software tends to change. The features you have written today may or may not exist tomorrow, and new requirements may come up that you have to implement. Maintainability refers to the ease with which software can be modified, updated, and repaired over time.

Maintainability has 3 important parts:-

Operability:- Make it easy for operations teams to keep the system running smoothly. Design the system in such a way that the operations team is able to debug & fix the issues that come up. This includes establishing good practices and tools for deployment, configuration management, and more.

Simplicity:- Make it easy for new engineers to understand the system, by removing as much complexity as possible from the system. Keep the design of the system simple to avoid confusion. Keep a set of practices & design decisions documented somewhere.

Evolvability:- Make it easy for engineers to make changes to the system in the future, adapting it for unanticipated use cases as requirements change. Practicing TDD (test-driven development) helps you add new functionality without breaking the old code. Make sure you write good utility functions which can be reused again & again.
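As a small illustration of the evolvability point, here is a reusable utility function together with the tests that protect it. The function `chunked` and its tests are hypothetical examples; in a real project you would typically run such tests with a test runner like `pytest` or `unittest` rather than calling them by hand.

```python
def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


def test_chunked_even_split():
    assert chunked([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]


def test_chunked_keeps_remainder():
    assert chunked([1, 2, 3], 2) == [[1, 2], [3]]


def test_chunked_rejects_bad_size():
    try:
        chunked([1], 0)
    except ValueError:
        return
    raise AssertionError("expected ValueError")


# Run the tests by hand; a test runner would discover them automatically.
for test in (test_chunked_even_split, test_chunked_keeps_remainder, test_chunked_rejects_bad_size):
    test()
print("all tests passed")
```

If someone later changes `chunked` for a new use case and breaks the old behaviour, these tests fail immediately instead of the bug reaching users.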

I hope you now understand all 3 important aspects of building good software. If you have any suggestions, feel free to add them in the comments below.